When I began exploring AI, my focus was primarily on its capabilities. I quickly put it to use for both essential and trivial tasks, and that experience greatly shaped my understanding of it. The significance of using AI well cannot be overstated. Some call this skill “prompting”, but that label feels reductive. Calling someone skilled in human interaction merely “good at asking questions” overlooks the depth of cooperating with another party.

Mastering interaction is about understanding the other party: recognizing their strengths, weaknesses, and operational tendencies. It’s about knowing when to support and when to trust them. Our ability to relate with humans is bolstered by shared experiences: living in the same environment, consuming similar media, and even engaging in face-to-face conversations.

Many express disappointment with AI outputs, expecting factual accuracy akin to an encyclopedia. But AI models such as GPT-4 operate more as reasoning engines. They rely on input variables (prompts) and internally associated data for context. The inherent limitation lies in that data and its associative power. Compared to a human, the model’s context is extremely limited, both in language and in personal history.

To harness AI’s potential, we should view it as a reasoning entity. GPT-4’s intelligence, if we differentiate between intelligence and data-based associations, is exceptional. This distinction is similar to the one made by Mensa-style IQ tests, which aim to measure intelligence without requiring background knowledge.

In essence, the model’s internal data serves as foundational knowledge, enabling it to comprehend abstract input and produce contextually rich outputs. Yet this “general context” is drawn from vast internet data, which may not always reflect reality. To maximize the model’s effectiveness, we must provide as much specific context and information as possible.

So far, fine-tuning has given us very limited results relative to the effort involved. Instead, the current paradigm seems to lean towards highly evolved general foundation models combined with augmented data. OpenAI lets us do this in a fairly advanced way through its embeddings API, which allows us to create mathematical semantic associations for any information we want. This is, of course, something we can do when we are systematizing associations and building our own AI-augmented systems.
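To make “mathematical semantic associations” a little more concrete, here is a minimal sketch of the retrieval idea. It is not the OpenAI API: `toy_embed` is a hypothetical stand-in that just counts words, used so the example runs on its own. In a real system, an embedding model would produce the vectors, and the similarity step would stay conceptually the same.

```python
import math
from collections import Counter


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: a word-count vector."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = toy_embed(query)
    scored = [(cosine_similarity(query_vec, toy_embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


documents = [
    "opening hours for the pizzeria",
    "refund policy for online orders",
]
best_match = retrieve("what is the refund policy", documents)
```

The retrieved text can then be placed in the prompt as extra context, which is the “augmented data” part of the paradigm described above.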

One approach I’ve adopted for ad-hoc interaction, which is the more common kind in my own use of AI, is having the AI ask questions to understand an issue better. Basically, you ask it to take the role of an interviewer and to ask whatever questions it needs in order to serve a specific intention you have. Another technique, which we’ve employed in building a legal application, involves extensive databases on Swedish law. By segmenting and vectorizing this data, the AI can produce precise answers when queried. Since we are working with a large corpus of data, this requires a lot of pre-defined logic and intricate chains, running both in parallel and in series, to reason and work through all the relevant data.
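As a rough illustration of chains that run both in parallel and in series, the sketch below splits a corpus into segments, queries each segment independently (the parallel step), and then combines the partial notes in one final call (the serial step). This is not the pipeline from our legal application: `ask_model` is a hypothetical placeholder for a real model call, the segmenting is a naive word split, and the vectorized retrieval step is left out for brevity.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(text, max_words=50):
    """Naively split text into segments of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def ask_model(prompt):
    """Hypothetical placeholder for a language model call (assumption)."""
    return f"[model answer to: {prompt[:40]}]"


def answer_over_corpus(question, corpus, model=ask_model, max_words=50):
    """Query each segment in parallel, then merge the notes in one serial call."""
    segments = split_into_segments(corpus, max_words)

    def extract(segment):
        return model(
            f"Question: {question}\nText: {segment}\nExtract what is relevant."
        )

    # Parallel step: the per-segment calls do not depend on each other.
    with ThreadPoolExecutor(max_workers=4) as pool:
        notes = list(pool.map(extract, segments))

    # Serial step: the final call depends on every note from the step above.
    combined = "\n".join(notes)
    return model(f"Question: {question}\nNotes:\n{combined}\nWrite a final answer.")
```

With a corpus of N segments, this makes N independent calls plus one combining call. Real chains are usually deeper, but the split between independent and dependent steps is the same.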

Now, let’s delve into “intention.”

According to Wikipedia, the term “intention” refers to a spectrum of related concepts. Prospective intentions relate to future plans, while immediate intentions guide actions in real-time. When prompting AI, we’re often dealing with prospective intentions. However, to leverage AI’s capabilities, we can provide it with both prospective and immediate intentions, thus sharing our context for a more tailored response.

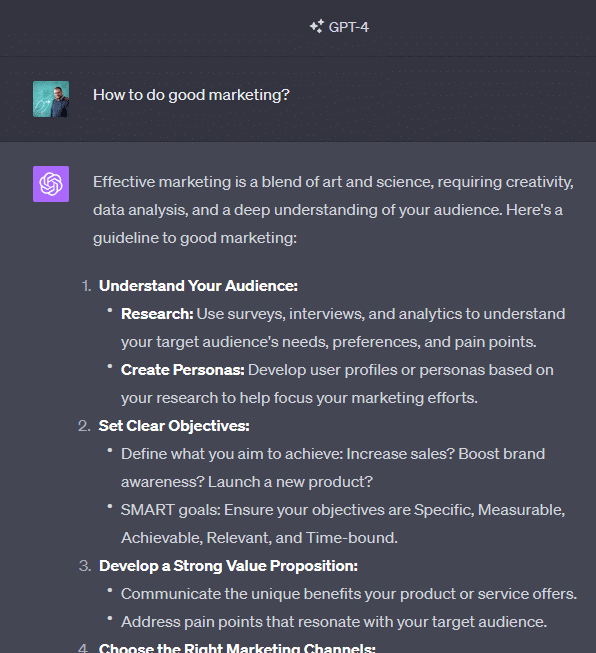

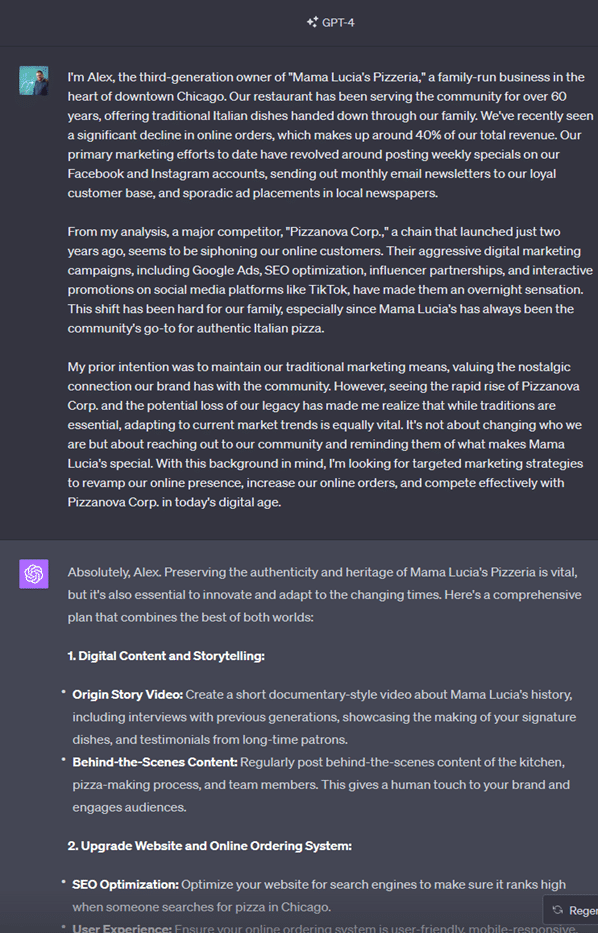

For instance, asking the generic question, “How to do good marketing?” may yield a generic list of marketing strategies. By adding more context and intention to our query, the output becomes more specific and actionable.
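One way to make this habit systematic is a small helper that assembles the prompt from its parts. This is only a sketch: the function name, the field labels, and the wording of the interviewer instruction are assumptions for illustration, not a fixed format that any model requires.

```python
def build_prompt(question, context=None, prospective_intention=None,
                 immediate_intention=None, interview_first=False):
    """Assemble a prompt from a question plus optional context and intentions."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    if prospective_intention:
        # Prospective intention: the longer-term plan behind the question.
        parts.append(f"Long-term goal: {prospective_intention}")
    if immediate_intention:
        # Immediate intention: what we want from this particular answer.
        parts.append(f"What I need right now: {immediate_intention}")
    parts.append(f"Question: {question}")
    if interview_first:
        # Interviewer technique: let the model gather missing context first.
        parts.append(
            "Before answering, act as an interviewer and ask me any "
            "questions you need to fulfil my intention."
        )
    return "\n".join(parts)


generic = build_prompt("How to do good marketing?")
specific = build_prompt(
    "How to do good marketing?",
    context="A family-run pizzeria facing newer competitors.",
    prospective_intention="Grow the business without losing its authenticity.",
    immediate_intention="Three concrete actions for the next month.",
    interview_first=True,
)
```

The first call produces the bare question. The second carries the context and both kinds of intention, and asks the model to interview us before it answers.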

Sample 1:

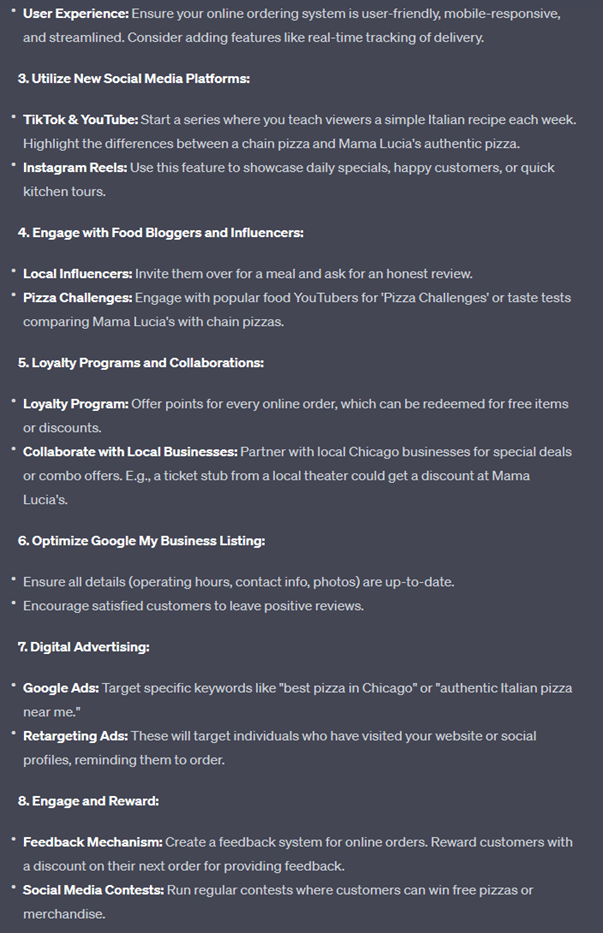

Sample 2:

The Effects of Contextual Depth in AI Interactions

As we analyze the two chat samples above, the profound impact of context and intention in AI communications becomes abundantly clear.

In the first example, where Alex delves deep into the history, challenges, and aspirations of “Mama Lucia’s Pizzeria,” the AI responds with a meticulously tailored marketing strategy. The advice provided takes into account the unique heritage of the business, the challenges posed by contemporary competitors, and the delicate balance between preserving authenticity and embracing digital advancements. This interaction showcases the potential of AI when fed with detailed context. It doesn’t just understand; it empathizes and offers solutions in sync with the user’s world.

Contrastingly, the second chat, which simply posed a general question about “good marketing,” received a generic response. Though the advice was sound and comprehensive, it lacked the personal touch and specificity the first sample exhibited. This kind of interaction can be likened to reading a textbook: informative but not necessarily catered to one’s unique situation.

Drawing from these examples, we discern that the more context and intention we embed in our interactions with AI, the richer and more relevant the outputs become. Think of AI as a reservoir of knowledge and wisdom, but like any reservoir, its usefulness is determined by the conduit’s size and direction. A vague, undirected conduit will yield broad streams of generalized data, while a wide, well-defined one can produce precise, targeted insights.

Therefore, as we move forward in this digital age, understanding and harnessing the power of context and intention with AI can be likened to the art of conversation. Just as in any fruitful dialogue, the richness of what we convey directly influences the depth and relevance of what we receive in return. With AI, we also need to compensate for every dimension of context that the model does not have access to.

If you and your business wish to explore this further, feel free to reach out to us.
